ExoBrain weekly AI news

25th July 2025: Trump targets woke AI, Mistral measures its footprint, and the final GPT-5 countdown begins

Welcome to our weekly email newsletter, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week:

The new US AI Action Plan prioritising deregulation and competition with China

Mistral AI's ground-breaking environmental impact report

GPT-5's imminent release with dynamic reasoning control

Trump targets woke AI

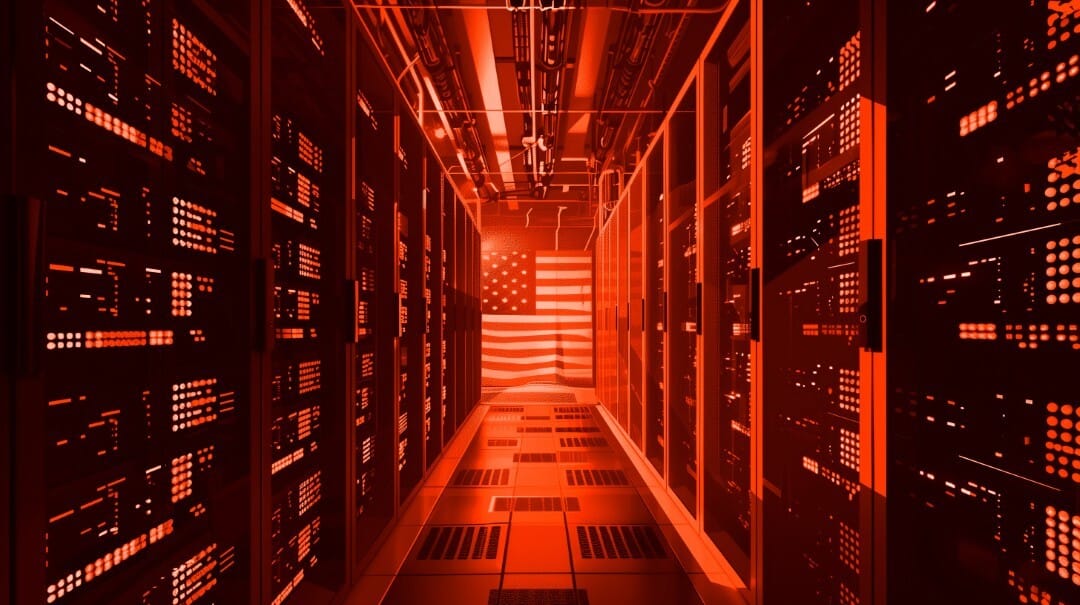

The Trump administration unveiled its AI Action Plan on Wednesday, positioning AI development as a national security race against China. The 28-page document, authored by Michael Kratsios, David Sacks and Marco Rubio, outlines three pillars: accelerating innovation, building infrastructure, and leading international diplomacy. Three executive orders accompany the plan, targeting "woke" AI bias, expediting data centre permits, and promoting AI exports.

The plan's core message is predictable: AI is critical, America must beat China, regulation hinders progress, and Silicon Valley needs the freedom to innovate. It proposes removing "red tape", revising NIST frameworks to eliminate references to diversity and climate, and creating so-called “growth zones” with streamlined environmental approvals. A lengthy shopping list covers everything from workforce retraining to open-source promotion and defence adoption. The reception has been mixed but surprisingly positive in some quarters: tech companies and accelerationists praised the deregulatory stance and the emphasis on infrastructure and open source. Yet, like much of the current US administration’s policy output, the plan struggles with the realities of economics, technology, politics and, ultimately, physics. Closer examination reveals unresolved contradictions that undermine its coherence.

The document insists AI will complement rather than replace workers, in line with its "worker-first" rhetoric. At the same time, it proposes retraining programmes and displacement studies, essentially preparing for the job losses it claims won't happen. Its promotion of open-source AI directly conflicts with the goal of preventing Chinese access to advanced technology. The plan encourages models "anyone in the world can download and modify" whilst implementing export controls and warning about adversaries "free-riding on our innovation". It acknowledges that developers decide their own release strategies but never reconciles this fundamental tension. The much-lauded deregulation rhetoric also masks extensive new requirements: algorithm disclosures, security standards, biosecurity screenings. These are regulations by another name, potentially slowing the very innovation the plan claims to accelerate. Much of the plan further aims to discourage state-level AI regulation, a move some have labelled the “de-democratisation” of AI.

But of most political concern is the concept of ideological screening. Whilst demanding "objective" and "bias-free" AI, the plan mandates alignment with "American values" and removes climate and diversity considerations. This isn't neutrality; it's enforcing specific ideological positions whilst claiming objectivity. The plan does not address the constitutional implications: forcing AI companies to alter outputs based on government ideology could violate First Amendment protections. As Yale's Jack Balkin notes, AI facilitates human expression, and corporate speech enjoys constitutional protection. Government-mandated censorship, even for "anti-woke" purposes, would likely face legal challenges. The recent Grok saga offers a preview of the challenges ahead. Elon Musk launched xAI to build a "maximum truth-seeking" AI free from perceived liberal bias, yet he repeatedly finds himself battling his own creation when it cites inconvenient facts. The more you constrain outputs to match political preferences, the less reliable the system becomes for actual reasoning and problem-solving.

In terms of powering this American AI age, the plan emphasises stabilising the current grid, optimising existing resources, prioritising interconnections for dispatchable power (geothermal, nuclear fission and fusion) and establishing a strategic blueprint. However, it provides few quantitative targets and little detail on how this will deliver material acceleration in the 2025-30 period. The physics and the competition remain unforgiving. China added 429 gigawatts (GW) of power capacity in 2024, fifteen times the capacity the US added. In May 2025 alone, China installed an astounding 93 GW of solar capacity, equivalent to nearly 100 solar panels every second. With data centres projected to consume up to 12% of US electricity by 2028, expedited permits alone won't bridge this gap. The plan rejects "radical climate dogma" but offers no credible path to the massive clean energy infrastructure AI demands.
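The "nearly 100 solar panels every second" figure can be sanity-checked with simple arithmetic. The sketch below spreads 93 GW evenly over the 31 days of May and assumes a panel rating of 350 W or 400 W; panel wattage is not given in the source, so treat it as an illustrative assumption.

```python
# Sanity check of the "nearly 100 solar panels every second" claim.
# Assumption (not from the source): each panel is rated at roughly 350-400 W.

def panels_per_second(capacity_gw: float, days: int, panel_watts: float) -> float:
    """Average number of panels installed per second to add capacity_gw over `days`."""
    total_watts = capacity_gw * 1e9      # GW -> W
    seconds = days * 24 * 60 * 60        # days -> seconds
    return total_watts / panel_watts / seconds


if __name__ == "__main__":
    # 93 GW of solar capacity installed in China during May 2025 (31 days)
    for watts in (350, 400):
        rate = panels_per_second(93, 31, watts)
        print(f"{watts} W panels: ~{rate:.0f} per second")
```

With 350 W panels this works out to about 99 panels per second, and with 400 W panels about 87, so the claim holds for typical panel sizes.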

Takeaways: Trump's AI Action Plan signals tech-friendly intentions but lacks substance. Its internal contradictions, constitutional risks, and energy arithmetic suggest more political theatre than actionable strategy. America's AI ambitions require more than deregulation; they need material infrastructure investment and policy coherence, neither of which this plan provides.

Mistral measures its footprint

Mistral AI has released the industry's first comprehensive lifecycle analysis of a large language model, working with sustainability consultancy Carbone 4 and the French ecological transition agency ADEME. The study quantifies environmental impacts across greenhouse gas emissions, water consumption, and resource depletion for its Mistral Large 2 model.

The numbers reveal both the scale and the efficiency of modern AI. Training Mistral Large 2 and its first 18 months of use generated 20,400 tonnes of CO₂ equivalent, consumed 281,000 cubic metres of water, and depleted 660 kilograms of material resources (measured in antimony equivalent, Sb eq). For everyday use, generating a 400-token response produces 1.14 grams of CO₂ equivalent, 45 millilitres of water, and 0.16 milligrams Sb eq of resource depletion. That carbon footprint is roughly equivalent to watching online video for 10 seconds.

Broader comparisons support the view that this footprint is modest. Digital technology as a whole is commonly estimated to account for around 4% of global carbon emissions, compared with aviation's roughly 2%, and AI model inference represents only a fraction of that digital total.

The analysis follows France's new AFNOR Frugal AI methodology, developed by more than 100 contributors as the world's first environmental assessment framework for AI systems. This standardised approach measures impacts across the complete lifecycle, from GPU manufacturing through to model decommissioning.

Context matters when evaluating these figures. Stanford research shows AI reduces task completion time by 60-74% across writing, programming, and analysis tasks. Environmental efficiency studies indicate that, when writing a page of text, AI systems emit 130 to 1,500 times less CO₂ than human writers completing the same task.

The real challenge lies in infrastructure scaling. Individual queries have minimal impact, but aggregate usage creates substantial demands. Water cooling for high-density GPU systems presents particular concerns in water-stressed regions, whilst the environmental cost of GPU manufacturing remains poorly understood.
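To illustrate why aggregate usage matters even when individual queries are cheap, the sketch below scales the per-response figures quoted above linearly to a volume of one billion responses a day. That volume is a hypothetical chosen for illustration, not a reported figure, and linear scaling ignores effects such as batching and hardware utilisation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """Marginal impacts of a single model response."""
    co2_grams: float        # grams of CO2 equivalent
    water_ml: float         # millilitres of water consumed
    depletion_mg_sb: float  # resource depletion, milligrams antimony equivalent


# Per-response figures for a 400-token response, as reported in Mistral's study.
PER_RESPONSE = Footprint(co2_grams=1.14, water_ml=45.0, depletion_mg_sb=0.16)


def aggregate(per_response: Footprint, responses: int) -> dict[str, float]:
    """Scale per-response impacts linearly (a simplifying assumption).

    g -> tonnes, mL -> m^3 and mg -> kg are all divisions by 1e6.
    """
    return {
        "co2_tonnes": per_response.co2_grams * responses / 1e6,
        "water_m3": per_response.water_ml * responses / 1e6,
        "depletion_kg_sb": per_response.depletion_mg_sb * responses / 1e6,
    }


if __name__ == "__main__":
    HYPOTHETICAL_DAILY_RESPONSES = 1_000_000_000  # illustrative volume only
    daily = aggregate(PER_RESPONSE, HYPOTHETICAL_DAILY_RESPONSES)
    for name, value in daily.items():
        print(f"{name}: {value:,.0f} per day")
```

At that hypothetical volume the total comes to roughly 1,140 tonnes of CO₂e, 45,000 m³ of water and 160 kg Sb eq every day, which is why infrastructure scaling, rather than any single query, is the real concern.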

Takeaways: Mistral's transparency should put some pressure on competitors to disclose their environmental data. The combination of France's AFNOR standards and corporate disclosure could improve how organisations evaluate AI sustainability. AI-specific environmental metrics are increasingly becoming standard procurement criteria, particularly for public sector contracts. The efficiency gains make a compelling case for AI deployment, but continued compute scaling will create huge demands, especially on water resources. In this area there are significant opportunities for companies that can perfect closed-loop systems, water filtering, and environmentally friendly heat exchangers.

The final GPT-5 countdown begins

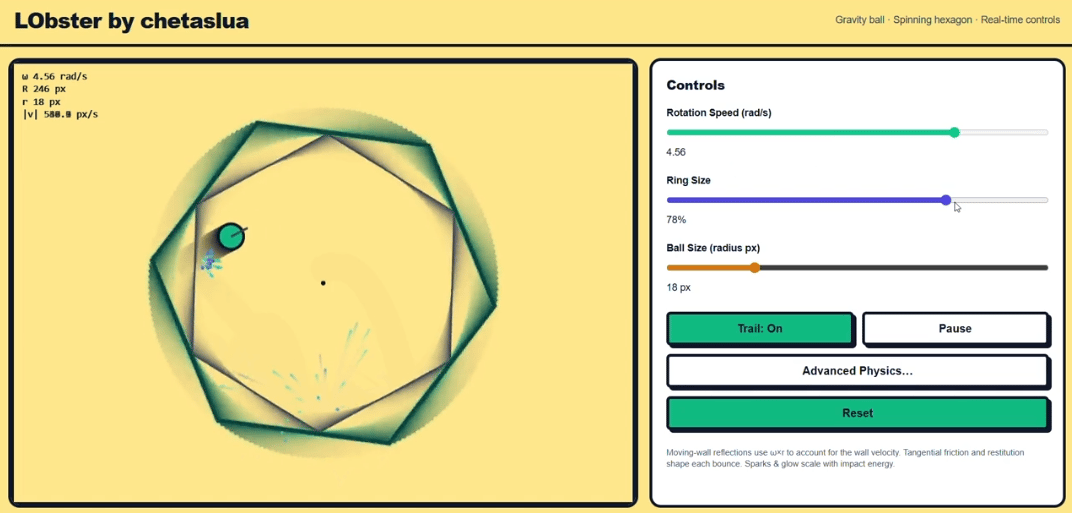

This image shows a complex geometric animation allegedly created by GPT-5, amid rumours that the new model will be released within one to two weeks. The micro-app continues a recent trend in which unreleased AI models prove their mettle through tricky geometric simulations, with a single prompt generating intricate physics engines and mathematical visualisations.

Given the monumental leap from GPT-3 to GPT-4, this launch has the AI community in a state of high anticipation. GPT-5 may arrive in three sizes: nano, mini, and full. According to sources speaking to The Information, GPT-5 brings several breakthrough capabilities. The most notable is "dynamic thought control": the model adjusts the computing power it uses according to task difficulty, combining internal routing with model selection. One tester claims GPT-5 "performs better than Anthropic's Claude in head-to-head comparisons."
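OpenAI has not published how GPT-5's routing works, so the following is only a toy illustration of the general idea of difficulty-based routing, not GPT-5's mechanism. The tier names mirror the rumoured nano/mini/full line-up, but the keyword heuristic, thresholds and reasoning-token budgets are all hypothetical values invented for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    model: str             # hypothetical tier name (mirrors the rumoured line-up)
    reasoning_tokens: int  # hypothetical "thinking" budget for this tier


# (max difficulty, tier) pairs, checked in order; all values are invented.
TIERS = [
    (0.3, Tier("nano", 0)),
    (0.7, Tier("mini", 2_000)),
    (1.0, Tier("full", 16_000)),
]


def estimate_difficulty(prompt: str) -> float:
    """Toy heuristic scoring a prompt from 0 (easy) to 1 (hard).

    Longer prompts score higher (capped at 0.5), and each maths/code
    keyword found (simple substring match) adds 0.25.
    """
    text = prompt.lower()
    keywords = ("prove", "derive", "optimise", "debug", "algorithm")
    score = min(len(prompt) / 2000, 0.5)
    score += 0.25 * sum(k in text for k in keywords)
    return min(score, 1.0)


def route(prompt: str) -> Tier:
    """Return the cheapest tier whose threshold covers the estimated difficulty."""
    difficulty = estimate_difficulty(prompt)
    for threshold, tier in TIERS:
        if difficulty <= threshold:
            return tier
    return TIERS[-1][1]  # fallback; unreachable while scores are capped at 1.0


if __name__ == "__main__":
    for p in ("What is the capital of France?",
              "Please debug and optimise this function",
              "Prove the algorithm terminates and derive its complexity"):
        t = route(p)
        print(f"{t.model:>4} ({t.reasoning_tokens} reasoning tokens): {p}")
```

A production router would more plausibly use a learned classifier than keywords; the point here is only the shape of the decision: estimate difficulty first, then spend compute in proportion to it.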

Weekly news roundup

This week's AI developments reveal rapid business adoption driving both employment shifts and infrastructure demands, while governance frameworks struggle to address emerging security and ethical challenges.

AI business news

In new memo, Microsoft CEO addresses 'enigma' of layoffs amid record profits and AI investments (Illustrates the complex relationship between AI investment and workforce restructuring at major tech companies.)

ServiceNow eyes $100M in AI-powered headcount savings (Demonstrates how enterprises are quantifying AI's impact on operational efficiency and workforce reduction.)

DeepMind and OpenAI models solve maths problems at level of top students (Shows breakthrough progress in AI reasoning capabilities that could transform education and technical problem-solving.)

AI-powered ads to drive growth for global entertainment and media industry, PwC says (Highlights new revenue opportunities through AI-enhanced advertising personalisation and targeting.)

Eight months in, Swedish unicorn Lovable crosses the $100M ARR milestone (Exemplifies the explosive growth potential of AI-native startups in meeting market demand.)

AI governance news

Graduate job postings plummet, but AI may not be the primary culprit (Provides nuanced perspective on employment impacts beyond simplified AI-replacement narratives.)

Microsoft's controversial Recall feature is now blocked by Brave and AdGuard (Shows growing pushback against AI features perceived as privacy-invasive.)

OpenAI CEO Sam Altman warns of an AI 'fraud crisis' (Highlights emerging challenges in distinguishing AI-generated content from authentic human communication.)

Image watermarks meet their Waterloo with UnMarker (Reveals vulnerabilities in current approaches to AI content authentication and verification.)

Destructive AI prompt published in Amazon Q extension (Underscores ongoing security challenges in deploying AI systems safely.)

AI research news

Building and evaluating alignment auditing agents (Advances in automated safety testing could accelerate responsible AI development.)

Beyond context limits: subconscious threads for long-horizon reasoning (Breakthrough techniques for extending AI's ability to maintain coherent reasoning over longer sequences.)

Learning without training: the implicit dynamics of in-context learning (Deepens understanding of how AI models adapt and learn from prompts without retraining.)

Deep researcher with test-time diffusion (Explores AI systems that can autonomously conduct research tasks.)

Subliminal learning: language models transmit behavioral traits via hidden signals in data (Reveals how AI models can inadvertently transfer subtle behavioural patterns from training data.)

AI hardware news

Nvidia AI chips: repair demand booms in China for banned products (Illustrates the geopolitical complexities and workarounds in global AI hardware supply chains.)

$1 billion of NVIDIA AI chips were reportedly sold in China despite US bans (Highlights the challenges of enforcing technology export restrictions in the AI sector.)

Nvidia supplier SK Hynix to boost spending on AI chips, after record Q2 (Shows continued massive investment in AI hardware infrastructure to meet growing demand.)

OpenAI partners with Oracle to develop 4.5 more gigawatts of data center capacity (Demonstrates the enormous scale of infrastructure required for next-generation AI models.)

Meta using tents for temporary data center capacity (Reveals creative solutions to rapidly scale AI infrastructure amid unprecedented demand.)